How to Become a DevOps Engineer in Six Months?

What is DevOps?

DevOps is a mix of development (Dev) and operations (Ops). Beyond that, it’s all the connected tools and disciplines of both of those business areas that let an organization deliver services and software applications at high speed, so they can better serve their customers.

The Microsoft-approved definition of DevOps is “the union of people, processes and technology to continually provide value to customers.” Both definitions are open to interpretation, but DevOps centers around writing code that pulls together customers (internal and external) and business processes.

Sometimes, DevOps engineering means just “being that go-to employee” who can quickly and efficiently write code to address an engineering issue. In other words, in some organizations, DevOps is the indispensable IT employee who knows how to write effective code.

Path to become a DevOps engineer

The question of how to become a DevOps engineer has a relatively straightforward answer. With that said, you’ll need to bring a few things to the table. First and most important to the DevOps career path is a passion for learning, knowledge, and logic.

Who can become a DevOps engineer?

DevOps engineers need to be able to read between the lines in their customers’ requirements. They also have to produce software and services that meet those requirements in a usable, testable form. Since development doesn’t happen in a vacuum, you’ll also need leadership and management skills, along with a cool head under pressure.

It’s not all about tools!

When most DevOps hiring managers look for a new employee, they’re more concerned with mindset than with tools. If you’ve got a tech background, you’re willing to learn, and you’re an engineer at heart, you’ve already got the basics of a DevOps career.

DevOps engineers are curious, constantly improving their skillsets, and focused on lifelong learning. So while you can build the core skillset in a few months, your main focus should be on learning, with a goal of providing massive value to your next employer.

Learn to understand systems and processes, and you have the right mindset. That mindset will help you learn how to start a career in DevOps, and more important, how to be a good DevOps engineer.

How long does it take to become a DevOps engineer?

It takes about six months to become a DevOps engineer, assuming you have some basic Linux admin and networking skills, and that you apply the DevOps engineer learning path outlined below. With that said, that career won’t just happen overnight. The length of time required depends on several factors, including your mindset, your current skill level, and your career position.

With that caveat, there’s no shortage of free tools and resources you can use to help you on your journey. Some professional DevOps engineering sites even offer free or vastly reduced exams to help you grow and prove your worth. Let’s dig into how to become a DevOps engineer, starting with the tools and skills.

What skills will I need?

To become a DevOps engineer, at the bare minimum you’ll need basic Linux admin and networking skills, plus some scripting fundamentals, along with the following DevOps skills:

1. Intermediate to advanced Linux skills

In DevOps, you’re not installing a server once and then logging in every now and then to perform a few admin tasks. You need to understand how to create highly customized Linux images from the ground up, both for VM and container use cases — unless you plan to become a Windows Server DevOps engineer.

2. Intermediate networking skills

In DevOps there’s no “network team.” All network resources are software-defined. In other words, networks are part of infrastructure as code. At a bare minimum, you’ll need a solid grasp of the OSI model, IPv4, subnetting, stateless and stateful firewalling, and DNS. These skills are usually included in advanced cloud certifications.
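To get a feel for subnetting without touching real infrastructure, here is a minimal sketch using Python’s standard `ipaddress` module. The `10.0.0.0/16` range and the host address are made-up example values, not a recommended address plan.

```python
import ipaddress

# Hypothetical address plan: one /16 for the whole environment (example values).
vpc = ipaddress.ip_network("10.0.0.0/16")

# Carve the /16 into /24 subnets and keep the first three.
subnets = list(vpc.subnets(new_prefix=24))[:3]

for net in subnets:
    # num_addresses counts every address in the block,
    # including the network and broadcast addresses.
    print(net, "netmask", net.netmask, "addresses", net.num_addresses)

# Find which of those subnets a given host address belongs to.
host = ipaddress.ip_address("10.0.1.57")
owner = next(net for net in subnets if host in net)
print(host, "is in", owner)
```

The same kind of calculation is what you do by hand when you plan the address ranges for a cloud network.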

3. A commitment to at least one cloud

Clouds aren’t merely managed data centers. In order for you to automate workloads in a given cloud (AWS, Azure, GCP, etc.), you need a firm grasp of its specific semantics. You’ll need to know what resources are available, how they’re organized, and what properties they have.

4. Infrastructure automation

Once you understand the resources (and their properties) applicable to a cloud, you’re ready to automate their creation using tools such as Terraform and Ansible.

5. SDLC, CI/CD pipelines, and scripting

In DevOps, we deliver infrastructure in a similar way to applications, so you’ll need to be acquainted with the fundamentals of the software development life cycle (SDLC). This includes versioning strategies using source code management systems like Git, and CI/CD tools such as Jenkins and CircleCI. Advanced automation tasks may prove difficult through shell scripts alone. You’ll often require more powerful scripting using the likes of Python, Perl, or Ruby.

6. Container technology

For legacy workloads you may automate the creation of a VM image. But for new applications you’ll be working with containers. As such, you need to know how to build your own Docker images (Linux skills required!) and deploy them using Kubernetes. FaaS technology like AWS Lambda also relies on container-style isolation behind the scenes.

7. Observability technology

While all clouds have monitoring dashboards and standard “telemetry” hooks, most large employers use third-party monitoring tools (both commercial and open source) such as Prometheus, Dynatrace, Datadog, or the ELK stack.

Beyond that skills list, tool-building in DevOps requires a fundamental understanding of logic and how to express it in a form a computer can run. While that may sound a tad scary for the uninitiated, there are several good books that teach programming fundamentals through a beginner-friendly language. Here are a couple of my favorites:

- Learning Python (O’Reilly)

- Learn Windows PowerShell in a Month of Lunches (Jones, Hicks; a personal favorite, as I love PowerShell)

Now let’s dig into the nuts and bolts of how to become a DevOps engineer — starting with education.

What education do I need?

One of the great things about DevOps is that it’s about what you can do, not what qualifications you have. Some of the best DevOps engineers in the field are self-taught, with little in the way of formal higher education. The biggest requirement is motivation and an interest in DevOps engineering.

With that said, you’ll have a much easier time both learning DevOps skills and getting a company to hire you if you have a bachelor’s degree in software development, IT, or a related field.

Don’t take forever to get trained

Start your DevOps engineer roadmap by looking through the skills list above. If you already have some of those skills — great. If not, be honest about the time you’ll need to spend to learn them. But don’t stress about getting everything perfect before you start. If you wait for mastery, you’ll never get a DevOps job.

Start by learning a few of the easy-to-learn skills. If you’re already employed in a non-DevOps job, start working on some DevOps projects now, to build mastery and proof you have the skills. Then make the switch to a full-time DevOps career.

You may even find that your own company has DevOps openings you could move into. Keep a keen eye on internal and external vacancies alike.

Here are the DevOps skills you’ll need

Let’s take a deeper look now at how to become a DevOps engineer — the DevOps career path and how to build the skills. We’ll share the reasons each of these tools is important, and how long it’ll take to learn each one. We’ll also point you to some good online classes and certifications.

You can learn most of these skills on the job — but a word of caution. In the sink-or-swim world of DevOps career growth, different companies have different requirements. There’s no one-size-fits-all approach.

Foundation knowledge: 4 months

We’ve put a plus sign after most of the time frames below, because while you can learn the basics quickly, mastery can take much longer.

1. Intermediate to Advanced Linux and Networking: 1 month+

Linux is the OS and server platform of choice for DevOps engineers in companies of any size. Linux’s open-source nature, small operational footprint, and support from the likes of Red Hat and Ubuntu make it the go-to not only for DevOps, but for tool building in general. One of the best things about Linux is that you can download it and start using it today.

If you feel that your Linux skills are rusty, you can get started with the free course offered by Udemy. In fact, if you want to learn how to become a DevOps engineer exclusively from Udemy, they have an entire curriculum of core DevOps classes.

In terms of networking, you’ll get the necessary skills if you do an intermediate cloud certification, such as AWS Certified Solutions Architect, but it helps if you take a specialized course such as The Bits and Bytes of Computer Networking on Coursera.

2. Advanced Scripting: 2 months+

First of all, you’ll always need shell (e.g., bash) scripting skills, because this is the default for Linux and most tools.

For “advanced” scripting use cases, there are quite a few languages out there, but Python is a good start if you don’t know what scripting language to pick.

You can become productive in Python in as little as two months with online tutorials from LearnPython.org, though real mastery takes longer. You’ll also find that many employers use other languages such as Perl and Ruby, so be ready to learn those if need be.
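As a taste of the kind of task where Python starts to pay off over shell, here is a small sketch of a disk-usage check built on the standard library. The function name `disk_report` and the 80% warning threshold are illustrative choices, not part of any particular tool.

```python
import shutil


def disk_report(path="/", warn_percent=80.0):
    """Return (percent_used, status) for the filesystem that holds `path`."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (in bytes)
    percent_used = usage.used / usage.total * 100
    status = "WARN" if percent_used >= warn_percent else "OK"
    return round(percent_used, 1), status


if __name__ == "__main__":
    percent, status = disk_report("/")
    print(f"/ is {percent}% full: {status}")
```

Because the check returns structured values instead of printing text, it’s easy to reuse from a larger script or a scheduled job.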

3. Cloud Training and Certification: 1 month+

AWS is the 800-pound gorilla among cloud providers, and AWS and Linux go together like strawberries and cream. You’ll need to be fluent in AWS before you can call yourself part of the DevOps community.

The beauty of AWS and cloud development in general is that you only pay for what you use. That model makes cloud computing ideal for DevOps testing. You can set up an environment quickly, use it for what you need, then pull it down again.

It’s easy to start using AWS, since there’s a 12-month free tier available to anyone who signs up. You can learn professional-grade skills in AWS in as little as one month, though mastery can take years of continual on-the-job use. Get your AWS certification here.

4. Google Cloud: 1 month+

Azure offers similar employment opportunities to AWS, but what about GCP?

The Google Cloud Platform (GCP) is smaller than AWS and Azure, but it excels particularly in data mining and artificial intelligence (including deep learning). Google’s DevOps-related offerings are becoming increasingly popular with large companies.

In the banking industry for example, the Google AI/ML tools are creating new ways of doing business, plus adding fraud detection and usage-pattern tracking. This saves huge amounts of time trying to develop similar tools in-house.

Similarly, other large companies are using Google’s ML tools to bring massive data sets down to size, drawing business-driving insights from previously unmanageable seas of data.

Want to know more about how to become a DevOps engineer with Google Cloud? You can get your Google Cloud certification here in three months, though you can learn to develop applications with Google Cloud in as little as one month.

Skills: 1 month

It doesn’t take long to learn the DevOps skills you’ll need to succeed in your new career. All these tools are free to use and experiment with. They just require a little time and effort on your part. Let’s look at how long it takes to learn the basic DevOps tools like Terraform, Git, Docker, Jenkins, ECS, and the ELK Stack.

1. Configure: Terraform (and Ansible) — 1 week+

Configuration management is at the heart of fast software development. Poorly configured tools waste time, while well-configured tools save it.

As its name implies, Terraform has one purpose in life — to create infrastructure as code in an automated way that speeds up your entire process.

Ansible concerns itself with desired-state server configuration, ensuring that servers are configured to spec. These two technologies are cornerstones of DevOps. Both may seem complex at first, but they’re built around declarative configuration files: Terraform uses HCL (HashiCorp Configuration Language), and Ansible playbooks are written in YAML.
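The desired-state idea can be illustrated in a few lines of Python. This is a toy sketch, not how Terraform or Ansible are implemented: the function names `plan_changes` and `apply_changes` and the server settings are hypothetical. It compares what you want with what exists, applies only the difference, and finds nothing to do on a second run.

```python
def plan_changes(desired, actual):
    """Compare desired and actual settings and list the changes needed."""
    changes = []
    for key, want in sorted(desired.items()):
        if key not in actual:
            changes.append(("add", key, want))
        elif actual[key] != want:
            changes.append(("change", key, want))
    return changes


def apply_changes(changes, actual):
    """Apply planned changes to a copy of the actual state."""
    result = dict(actual)
    for _action, key, value in changes:
        result[key] = value
    return result


# Hypothetical server settings, for illustration only.
desired = {"nginx": "installed", "port": 443, "ntp": "enabled"}
actual = {"nginx": "installed", "port": 80}

changes = plan_changes(desired, actual)
print(changes)

new_state = apply_changes(changes, actual)
print(plan_changes(desired, new_state))  # the second run finds nothing to change
```

That “run it again and nothing happens” property is called idempotence, and it’s what makes this style of tool safe to run repeatedly.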

It takes about a week to learn the basics of Terraform. Udemy offers a great online class that bundles AWS, Terraform, and Docker.

2. Version control: Git and GitHub (GitLab) — 20 minutes+

Version control is key to any DevOps endeavor. It lets DevOps engineers and their team members create and review code faster, without wasting time sharing endless files and iterations.

Git is a standalone tool that by default runs on your local machine. This is different from GitHub, which hosts Git repositories in the cloud, with the overhead managed by GitHub itself. In the world of infrastructure as code, version control with products like Git and GitLab is essential.

GitLab is a complete DevOps platform with an open-source core. It helps users deliver software faster, with collaboration and security all rolled into one. Looking to learn more about how to become a DevOps engineer with Git? You can learn the basics of Git in minutes if you’re already a programmer.

3. Package: Docker (Lambda) — 3 days+

Packaging is where build management meets release management. It’s where your code and infrastructure come together for deployment.

Docker has become a cornerstone of modern DevOps. It allows DevOps teams to run code in small, isolated containers. That way, building and replacing individual services becomes simpler than updating everything in one go (which is very non-DevOps).

AWS Lambda is a serverless alternative that many companies use instead of managing containers themselves. Though it’s best to know both tools, Docker is an excellent starting point. You can learn Docker in just a few days. Udemy offers a solid beginner’s course online for DevOps. They also have a Docker class bundled with Kubernetes.

4. Deploy: Jenkins (CodeDeploy) — 2 days+

During deployment, you’ll take your code from version control to users of your application. Automation is a key component of this step, and Jenkins is one of the most widely used tools for it.

Jenkins allows automation for all manner of tasks, including running build tests and making decisions based on whether code passes or fails the build process. You can also use Jenkins for more mundane purposes, like centralized management of scripts and executing commands via SSH (and other authentication pathways).

It’s a tool to automate those frequent and boring tasks that computers can do better than even the best DevOps engineer could. Some companies choose CodeDeploy over Jenkins, making it another useful DevOps tool to learn.
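The pass/fail gating described above can be sketched in plain Python. This is only an illustration of the idea, not Jenkins’ own pipeline syntax: `run_pipeline` and the three stage functions are hypothetical stand-ins for real build, test, and deploy jobs.

```python
def run_pipeline(stages):
    """Run stages in order and stop at the first one that fails.

    `stages` is a list of (name, callable) pairs. Each callable returns
    True on success and False on failure.
    """
    results = []
    for name, step in stages:
        ok = bool(step())
        results.append((name, "passed" if ok else "failed"))
        if not ok:
            break  # later stages, such as deploy, never run
    return results


# Hypothetical stages standing in for real jobs.
def build():
    return True


def unit_tests():
    return 2 + 2 == 5  # a deliberately failing check


def deploy():
    return True


results = run_pipeline([("build", build), ("test", unit_tests), ("deploy", deploy)])
for name, outcome in results:
    print(f"{name}: {outcome}")
```

Here the failing test stage stops the run, so the deploy stage is never reached. That’s the behavior you want from any CI/CD tool.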

You can learn to use Jenkins in just a few days. Udemy offers a great Jenkins class online for DevOps engineers.

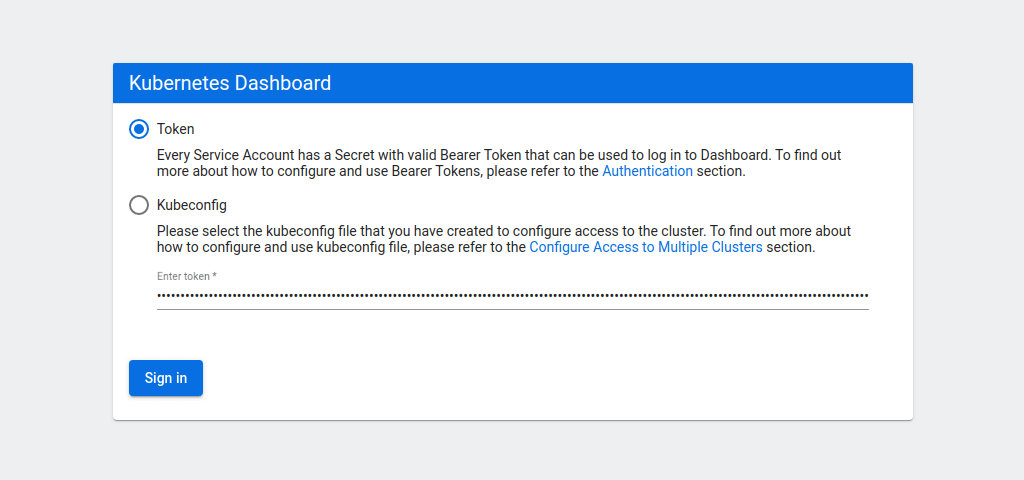

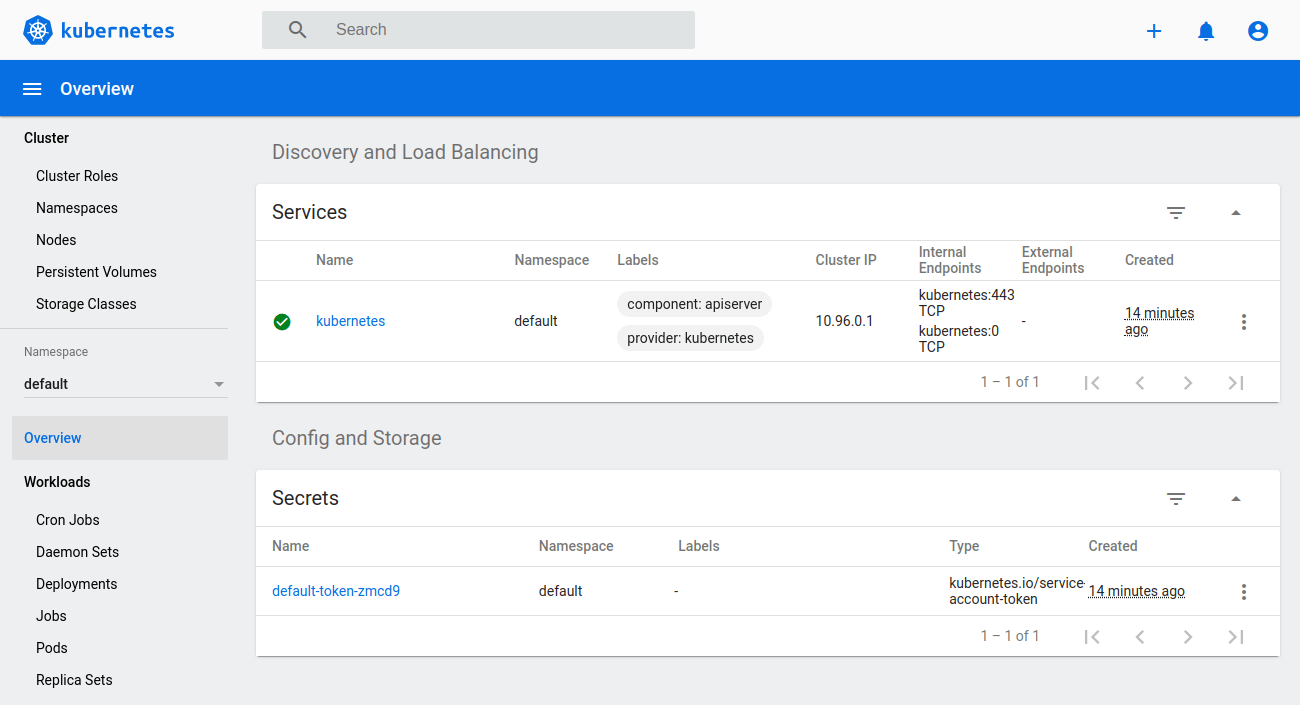

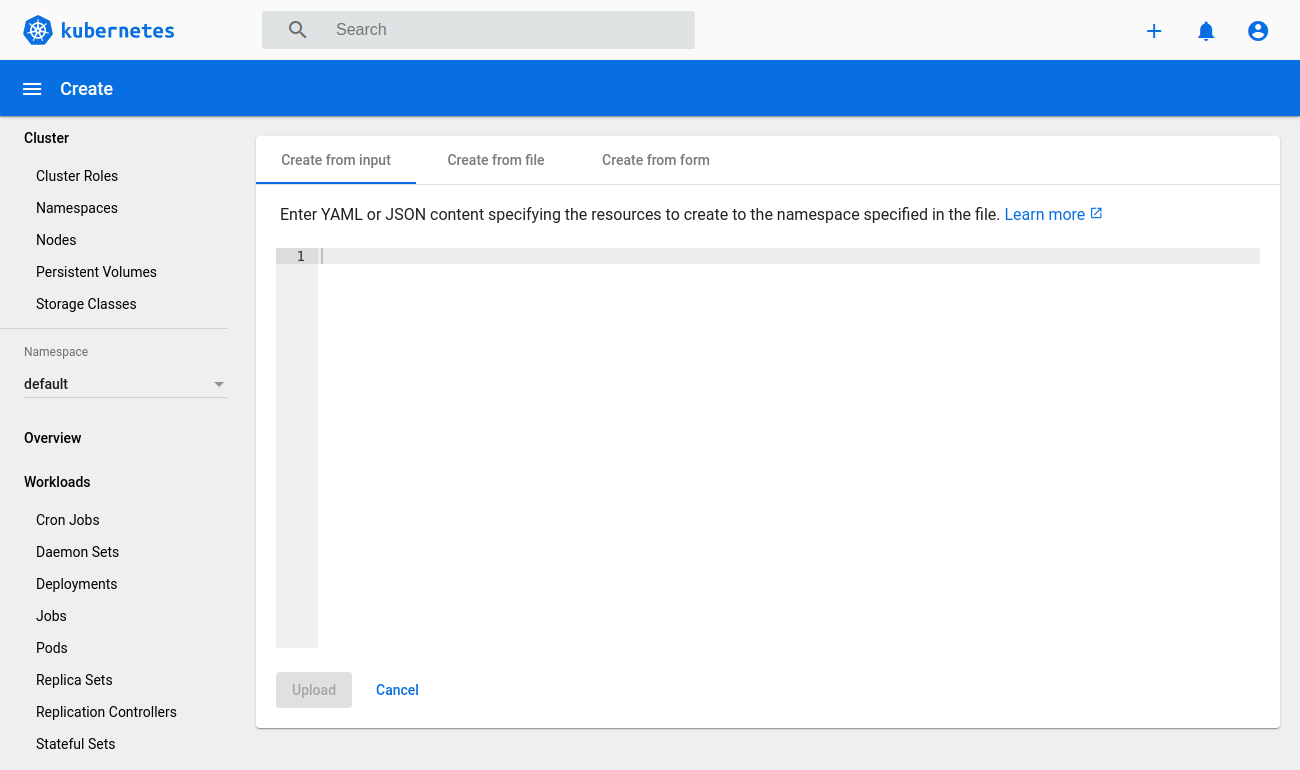

5. Run: ECS (Kubernetes) — 1 day

Kubernetes is the bread and butter of DevOps. It builds on containers and adds orchestration on top. For instance, it lets the administrator ensure that several copies of a container are running. That way, if a single VM or host is lost, the service is still available.
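To make the “keep several copies running” idea concrete, here is a toy reconciliation sketch in Python. It isn’t the Kubernetes scheduler or its API. The `reconcile` function and the host names are hypothetical, and real orchestrators weigh many more factors when placing containers.

```python
def reconcile(desired_replicas, placements, healthy_hosts):
    """Return a placement list with `desired_replicas` copies on healthy hosts.

    `placements` lists the host each running copy is on. Copies on hosts that
    are no longer healthy are dropped, then replacements go to whichever
    healthy host currently runs the fewest copies.
    """
    if not healthy_hosts:
        return []
    running = [host for host in placements if host in healthy_hosts]
    running = running[:desired_replicas]  # scale down if there are too many
    while len(running) < desired_replicas:
        # Sort first so ties are broken the same way on every run.
        target = min(sorted(healthy_hosts), key=running.count)
        running.append(target)
    return running


# Hypothetical cluster: three hosts, three copies, then host-b fails.
placements = ["host-a", "host-b", "host-c"]
after_failure = reconcile(3, placements, {"host-a", "host-c"})
print(after_failure)
```

After the simulated failure, the sketch still reports three running copies, all on the surviving hosts.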

ECS and Kubernetes perform valuable services like this in the background. They provide automated tooling for managing containers and their availability. They also add important features such as role-based access control and more centralized auditing and management.

See IBM’s Kubernetes learning path and guide for a 13-hour course.

6. Monitor: ELK Stack (Prometheus) — 2 days

Once your new application is up and running, you’ll need a real-time view of its status, infrastructure, and services. To this end, DevOps engineers love ELK.

ELK provides all the base components for effective log management and search functionality. It’s Elasticsearch, Logstash, and Kibana, three open source applications from Elastic.

ELK takes data from multiple sources and lets you visualize it using charts and graphs. Prometheus, which focuses on metrics and alerting rather than logs, is just as important for a DevOps engineer to understand. You can learn to use the ELK Stack in just a few days with Udemy’s 4-star online class.
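If you’re wondering what “taking data from multiple sources” boils down to, this Python sketch shows the core idea of parsing raw log lines into structured events and counting them. It doesn’t use the ELK APIs: the log format, the `parse` function, and the sample lines are assumptions made up for the example.

```python
import re
from collections import Counter

# Assumed log format for this sketch: "<date> <time> <LEVEL> <service>: <message>"
LINE = re.compile(
    r"^(?P<timestamp>\S+ \S+) (?P<level>[A-Z]+) (?P<service>[\w-]+): (?P<message>.*)$"
)


def parse(lines):
    """Turn raw log lines into dictionaries, skipping lines that do not match."""
    events = []
    for line in lines:
        match = LINE.match(line)
        if match:
            events.append(match.groupdict())
    return events


# Made-up sample lines, for illustration only.
sample = [
    "2024-01-01 10:00:00 INFO web: request served",
    "2024-01-01 10:00:01 ERROR web: upstream timeout",
    "2024-01-01 10:00:02 ERROR db: connection refused",
    "not a log line",
]

events = parse(sample)
by_level = Counter(event["level"] for event in events)
errors_by_service = Counter(e["service"] for e in events if e["level"] == "ERROR")
print(by_level)
print(errors_by_service)
```

Counts like these are the raw material for the charts and dashboards a monitoring stack draws for you.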

Those are the basics of how to become a DevOps engineer. Now let’s look into why GitHub matters so much, and how to get a DevOps job.

GitHub matters

One more word on GitHub as a shortcut to starting a career in DevOps. GitHub is essentially the CV of the DevOps world. Many DevOps hiring managers will check out your GitHub profile as a first step and point of contact. The good news is that you can learn GitHub and other DevOps tools while you create your virtual CV at the same time.

How to get a DevOps job in 1 month+

Knowing how to become a DevOps engineer doesn’t stop with skills. The next step in your DevOps engineer career path is getting the job. That sounds daunting, but if you’ve got software development experience, the skills above, and a few DevOps achievements for your resume, you’re well on your way to getting hired.

Here’s how to get into DevOps.

Rewrite your DevOps resume

The first step in getting a DevOps job is to rewrite your resume. A DevOps resume doesn’t need to show years of experience. As this excellent DevOps resume guide shows, start with a reverse-chronological format. Then in your work history, education, and projects section, list achievements, including:

- Development tasks from past jobs

- Side projects in development, IT, Agile, scripting, or automation

- Volunteer work in coding or distribution

- Projects in Terraform or other DevOps tools

- Linux/Unix projects

- Scripting in Python or Ruby

- Tasks completed with AWS, Jenkins, Maven, etc.

Start each resume bullet point with an action verb like developed, wrote, created, built, or deployed. Use numbers to show scale: how many projects, deployments, scripts, tests, or containers you handled, and how many customers or team members you supported.

The more you show DevOps achievements in your history, with measurable details, the higher your chance of getting hired. Knowing how to become a DevOps cloud engineer is all about showing your projects and accomplishments.

Apply to lots of DevOps jobs

Finding a DevOps job is a numbers game. If you apply to three jobs, you probably won’t hear back from any. If you apply to 50, you’ll likely get a response or two and maybe an interview. Plan to hear back from about one in every 30 applications, and to get an interview from about one in every 100.
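To see what those rates mean in practice, here is a quick back-of-the-envelope calculation in Python. The `funnel` function is a hypothetical helper, the batch sizes are example inputs, and the rates are the rough rules of thumb above rather than measured data.

```python
def funnel(applications, response_rate=1 / 30, interview_rate=1 / 100):
    """Estimate responses and interviews for a given number of applications."""
    return {
        "applications": applications,
        "responses": round(applications * response_rate, 1),
        "interviews": round(applications * interview_rate, 1),
    }


for count in (3, 50, 300):
    print(funnel(count))
```

At these rates, a handful of applications rounds down to almost nothing, which is why volume matters.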

In other words? You’ll need to apply to a lot of DevOps jobs. Probably something like 300 in a month to get one job (about 14 every weekday). But — you can vastly boost your chance of getting hired if you lean on networking. The easiest way? Start connecting with DevOps engineers on LinkedIn.

Then — don’t ask them for a job. Just ask if you can chat with them about their cool career. About 20% will be glad to share their success. You’ll learn tons about how to start a career in DevOps. And surprise surprise — some will even introduce you to their contacts.

Shun the unicorns

Don’t worry about being front-and-center in a DevOps job at Google, Amazon, or another giant company. Everybody clamors to get hired at those firms, creating stifling competition. Don’t be afraid to pursue a DevOps job at a less glamorous firm. Once you’ve got a little experience under your belt, then you can go unicorn hunting.

Consider working your way up

Is DevOps a good career for freshers? Not really. Don’t count on landing an entry-level DevOps job. DevOps is, by nature, an advanced position that requires highly skilled candidates. But — don’t let that discourage you. One of the best DevOps career paths is to start as a software developer or IT specialist in a company that also hires DevOps engineers.

If your current employer doesn’t hire DevOps pros, consider switching to one that does. Don’t stay entry-level for long. Three to six months is plenty. Once you’ve logged that time, commit to applying internally to DevOps positions in your new company. During your entry-level tenure, work to build accomplishments that look good on a DevOps resume.

Summary

Those are the basics of how to become a DevOps engineer. Though becoming a DevOps engineer takes persistence and passion, it’s not rocket science. Anyone with the drive (and a little time) can follow the DevOps career path, learn the necessary skills in five months, and get a DevOps job in one month. With the right skillset and job search strategy, you can be in your DevOps dream job very soon.