Introduction:

The role of an AWS DevOps Engineer is in high demand as more organizations adopt cloud computing and DevOps practices. To help you prepare for your next AWS DevOps Engineer interview, I’ve compiled a list of common questions categorized by difficulty level: basic, medium, and hard. In this blog post, I’ll provide sample answers to these questions to help you sharpen your knowledge and increase your chances of success.

Basic Level Questions:

1. What is AWS Elastic Beanstalk, and how does it work?

Answer: AWS Elastic Beanstalk is a fully managed service that simplifies application deployment on AWS. It handles capacity provisioning, load balancing, and application health monitoring. You upload your application code, and Elastic Beanstalk takes care of the rest, including resource management and scalability.

2. Explain the concept of Auto Scaling in AWS.

Answer: Auto Scaling automatically adjusts the number of EC2 instances in an Auto Scaling group based on predefined conditions. It helps maintain the desired number of instances to handle varying workloads. Scaling policies define conditions like CPU utilization or network traffic, triggering scale-in or scale-out actions.
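
To make the scaling-policy idea concrete, here is a minimal Python sketch of a threshold-based decision. It is a local simulation, not the AWS API: the function name `desired_capacity` and the 70%/30% CPU thresholds are assumptions I picked for illustration.

```python
def desired_capacity(current, cpu_percent, min_size=1, max_size=10,
                     scale_out_at=70.0, scale_in_at=30.0):
    """Return the new instance count for a simple threshold policy.

    Hypothetical rule: add one instance above scale_out_at, remove one
    below scale_in_at, and always stay within the group's size bounds.
    """
    if cpu_percent > scale_out_at:
        current += 1  # scale out
    elif cpu_percent < scale_in_at:
        current -= 1  # scale in
    # Clamp to the group's minimum and maximum size.
    return max(min_size, min(max_size, current))


if __name__ == "__main__":
    for cpu in (85.0, 50.0, 10.0):
        print(cpu, desired_capacity(current=3, cpu_percent=cpu))
```

In an interview, it's worth pointing out that the minimum and maximum sizes bound every scaling action, which is what the final clamp models.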

3. What is the purpose of AWS CloudFormation?

Answer: AWS CloudFormation allows you to define and provision AWS infrastructure resources in a declarative manner using templates. It simplifies infrastructure management by automating provisioning, updates, and deletion of resources. CloudFormation ensures consistency and reduces manual effort in managing infrastructure as code.
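
As a small illustration of what "declarative" means here, the sketch below builds a minimal template as a Python dictionary and prints it as JSON. The logical ID `ExampleBucket` and the helper name `build_template` are placeholders of mine; a real template would describe your own resources.

```python
import json


def build_template(bucket_logical_id="ExampleBucket"):
    """Build a minimal CloudFormation template as a Python dict."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Minimal example: one versioned S3 bucket",
        "Resources": {
            # The logical ID is a name you choose; it is hypothetical here.
            bucket_logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    "VersioningConfiguration": {"Status": "Enabled"},
                },
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_template(), indent=2))
```

The template states the desired end state (a bucket with versioning enabled) and leaves the ordering of API calls to CloudFormation.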

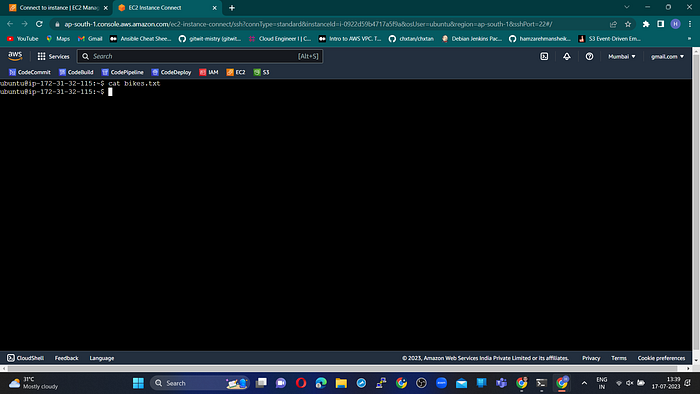

4. What is the difference between EC2 and S3 in AWS?

Answer: EC2 (Elastic Compute Cloud) is a compute service that provides resizable virtual servers, called instances, while S3 (Simple Storage Service) is a scalable storage service for storing and retrieving data as objects. EC2 is used for running applications and processing workloads, whereas S3 is designed for storing and accessing large amounts of data.

5. How do you secure data at rest in AWS?

Answer: AWS provides various mechanisms for securing data at rest. You can encrypt data stored in services like S3 and RDS using server-side encryption. AWS Key Management Service (KMS) allows you to create and manage the encryption keys. Additionally, appropriate IAM policies and access controls restrict who can read the data and use the keys.
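
For S3 specifically, default encryption is set per bucket. The sketch below only builds the configuration document in Python and does not call AWS. The field names follow the S3 PutBucketEncryption request as I understand it, so check them against the current API reference; the key alias is a made-up placeholder.

```python
def default_encryption(kms_key_id):
    """Return a default-encryption configuration that uses SSE-KMS.

    kms_key_id is a placeholder, for example a key ARN or an alias.
    """
    return {
        "Rules": [
            {
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": kms_key_id,
                },
                # Bucket Keys reduce the number of requests made to KMS.
                "BucketKeyEnabled": True,
            }
        ]
    }


if __name__ == "__main__":
    print(default_encryption("alias/example-key"))
```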

Medium Level Questions:

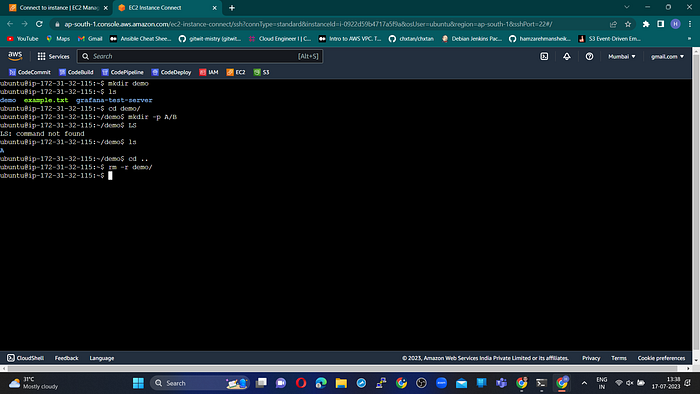

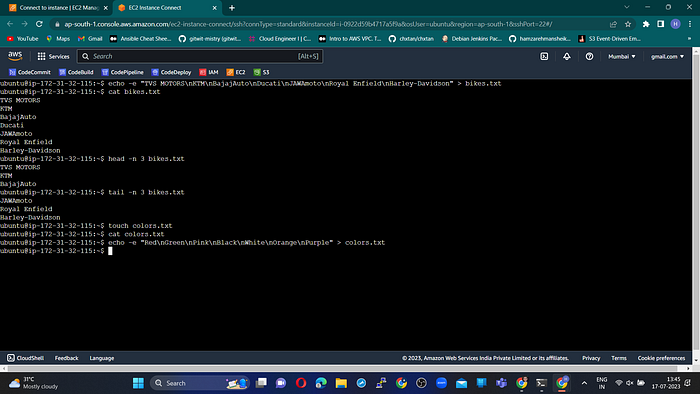

1. Describe the steps involved in setting up a CI/CD pipeline on AWS.

Answer: Setting up a CI/CD pipeline on AWS involves steps like storing code in CodeCommit, using CodeBuild for building applications, configuring CodePipeline for orchestration, integrating testing tools, provisioning infrastructure with CloudFormation, automating deployment, and monitoring the pipeline with CloudWatch.
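
The core behaviour of a pipeline, running stages in order and stopping at the first failure, can be sketched in a few lines of Python. This is a toy model for discussion, not how CodePipeline is implemented; `run_pipeline` and the stage names are my own placeholders.

```python
def run_pipeline(stages):
    """Run (name, action) stages in order and stop at the first failure.

    Each action is a callable returning True on success.
    Returns the names of the stages that completed successfully.
    """
    completed = []
    for name, action in stages:
        if not action():
            print(f"Stage '{name}' failed; stopping the pipeline.")
            break
        completed.append(name)
    return completed


if __name__ == "__main__":
    stages = [
        ("Source", lambda: True),
        ("Build", lambda: True),
        ("Test", lambda: False),   # simulate a failing test stage
        ("Deploy", lambda: True),  # never reached in this run
    ]
    print(run_pipeline(stages))
```

The point to make in an interview is that a failed test stage must block the deploy stage, which is exactly what the early `break` models.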

2. What is the difference between AWS CodeCommit and AWS CodePipeline?

Answer: AWS CodeCommit is a source control service for storing and versioning code, whereas AWS CodePipeline is a continuous delivery service that automates the build, test, and deployment stages. CodeCommit enables collaboration, while CodePipeline automates the release process by connecting various AWS services.

3. How do you monitor AWS resources for performance and operational issues?

Answer: Amazon CloudWatch is the primary service for monitoring AWS resources. It collects metrics and logs and lets you define alarms, so you can visualize performance data on dashboards and get notified when a threshold is crossed. CloudWatch Events (now Amazon EventBridge) triggers actions in response to events. AWS X-Ray adds distributed tracing and performance analysis for applications.
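
Alarms are easier to explain with a small model. The sketch below simulates an "M out of N datapoints" alarm in plain Python. It is a simplification that ignores missing-data handling, and the function and parameter names are my own rather than the CloudWatch API.

```python
def alarm_state(datapoints, threshold, datapoints_to_alarm, evaluation_periods):
    """Evaluate a simplified 'M out of N' greater-than-threshold alarm.

    Looks at the most recent evaluation_periods datapoints and returns
    'ALARM' if at least datapoints_to_alarm of them exceed the threshold.
    """
    recent = datapoints[-evaluation_periods:]
    if len(recent) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    breaching = sum(1 for value in recent if value > threshold)
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"


if __name__ == "__main__":
    cpu = [40, 85, 90, 95]  # hypothetical CPU utilization samples
    print(alarm_state(cpu, threshold=80, datapoints_to_alarm=2,
                      evaluation_periods=3))
```

Requiring several breaching datapoints, rather than one, is a common way to avoid alerting on short spikes.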

4. Explain the concept of blue/green deployment and how it can be achieved in AWS.

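Answer: Blue/green deployment is a release management strategy for zero-downtime deployments. Two identical environments, “blue” and “green,” are created. Blue serves live traffic while the green environment is updated with the new version, tested, and validated. Once verified, the router or load balancer is switched to route traffic to the green environment, and blue is kept available for a quick rollback. On AWS, the switch can be made with Route 53 weighted DNS records, by swapping target groups behind an Elastic Load Balancer, by swapping environment URLs in Elastic Beanstalk, or with the blue/green deployment option in CodeDeploy.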
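
The cutover can happen all at once or gradually. As an illustration of the gradual variant, the Python sketch below generates the weight pairs you might apply step by step, for example to weighted DNS records. The function name `shift_traffic` and the choice of equal-sized steps are assumptions for this example.

```python
def shift_traffic(steps):
    """Yield (blue_weight, green_weight) pairs that move traffic to green.

    Weights are percentages that always sum to 100; the first pair sends
    all traffic to blue and the last pair sends all traffic to green.
    """
    for step in range(steps + 1):
        green = round(100 * step / steps)
        yield 100 - green, green


if __name__ == "__main__":
    for blue, green in shift_traffic(4):
        print(f"blue={blue}% green={green}%")
```

Rolling back is the same operation in reverse: restore the earlier weights so that blue receives the traffic again.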

5. How would you architect a highly available and fault-tolerant system on AWS?

Answer: Designing a highly available and fault-tolerant system on AWS involves distributing the workload across multiple Availability Zones, using services like Route 53 and Elastic Load Balancing for traffic distribution, implementing data replication and backups, utilizing Auto Scaling, and monitoring with CloudWatch.
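
One detail that often comes up is how capacity is spread across Availability Zones. Here is a small sketch, assuming a simple round-robin placement; the helper `spread_across_azs` is hypothetical and only models the idea of balancing instances so that losing one zone removes a limited share of capacity.

```python
from itertools import cycle


def spread_across_azs(instance_count, zones):
    """Assign instances to zones round-robin and return counts per zone."""
    counts = {zone: 0 for zone in zones}
    # zip stops after instance_count items; cycle repeats the zones in order.
    for _, zone in zip(range(instance_count), cycle(zones)):
        counts[zone] += 1
    return counts


if __name__ == "__main__":
    print(spread_across_azs(5, ["us-east-1a", "us-east-1b", "us-east-1c"]))
```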

Hard Level Questions:

1. Discuss the challenges you might face while implementing a serverless architecture on AWS and how you would address them.

Answer: Challenges in serverless architecture include managing cold starts, optimizing function response times, handling distributed architectures, ensuring data consistency, managing service limits, and implementing security controls. Techniques to address these challenges include optimizing configurations, caching, event-driven architectures, and utilizing AWS service-specific best practices.

2. Explain the concepts of AWS Identity and Access Management (IAM) roles, policies, and permissions.

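Answer: An IAM role is an identity with attached permissions that a trusted entity, such as an AWS service, a user, or an application, can assume to obtain temporary credentials. Policies are JSON documents attached to roles, users, or groups that specify which actions are allowed or denied on which resources. Permissions are the effective result of evaluating those policies: an explicit deny always overrides an allow, and anything not explicitly allowed is denied by default. Together they let you apply the principle of least privilege and control access to AWS resources at a fine-grained level.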
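
A short example helps here. The policy document below uses the standard IAM JSON structure, while the evaluator is a deliberately simplified sketch of my own: it handles only identity policies with `*` wildcards, matches case-sensitively, and ignores conditions, principals, and the other policy types that real IAM evaluation takes into account. The bucket name is a placeholder.

```python
from fnmatch import fnmatchcase

POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "s3:GetObject",
         "Resource": "arn:aws:s3:::example-bucket/*"},
        {"Effect": "Deny", "Action": "s3:*",
         "Resource": "arn:aws:s3:::example-bucket/secret/*"},
    ],
}


def _as_list(value):
    """Policy fields may hold a single string or a list of strings."""
    return value if isinstance(value, list) else [value]


def is_allowed(policy, action, resource):
    """Simplified evaluation: explicit deny wins, default is deny."""
    allowed = False
    for stmt in policy["Statement"]:
        matches = (
            any(fnmatchcase(action, a) for a in _as_list(stmt["Action"]))
            and any(fnmatchcase(resource, r) for r in _as_list(stmt["Resource"]))
        )
        if not matches:
            continue
        if stmt["Effect"] == "Deny":
            return False  # an explicit deny overrides any allow
        allowed = True
    return allowed
```

With this policy, reading an ordinary object is allowed, reading anything under `secret/` is denied by the explicit deny, and writing is denied simply because nothing allows it.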

3. How would you design a multi-region deployment strategy for high availability and disaster recovery in AWS?

Answer: Designing a multi-region deployment strategy involves deploying resources across regions, implementing data replication mechanisms, utilizing global traffic distribution services, automating failover mechanisms, and regularly testing the disaster recovery plan to ensure effectiveness.
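
The failover part of that strategy boils down to "send traffic to the highest-priority healthy region". Here is a minimal sketch of that decision in Python, assuming health results are already available as a dictionary; `pick_region` is a hypothetical helper, and in practice a DNS or global routing service makes this decision for you.

```python
def pick_region(regions_by_priority, health):
    """Return the first healthy region in priority order, or None.

    health maps region name to True/False; unknown regions count as unhealthy.
    """
    for region in regions_by_priority:
        if health.get(region, False):
            return region
    return None


if __name__ == "__main__":
    regions = ["us-east-1", "eu-west-1"]
    print(pick_region(regions, {"us-east-1": False, "eu-west-1": True}))
```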

4. What are the best practices for securing an AWS infrastructure and ensuring compliance with security standards?

Answer: Best practices for securing AWS infrastructure include enforcing least privilege, strong password policies, and MFA. Regular patching, encrypting data in transit and at rest, using security features like Security Groups and WAF, and conducting regular security assessments all support compliance with security standards.

5. Describe the process of migrating an on-premises application to AWS, including the challenges and considerations involved.

Answer: Migrating an on-premises application to AWS involves assessing dependencies, selecting appropriate AWS services, planning the migration strategy, setting up networking infrastructure, migrating data, testing thoroughly, updating DNS records, and monitoring performance and cost in the cloud environment.

Conclusion:

Preparing for an AWS DevOps Engineer interview requires a solid understanding of AWS services, DevOps practices, and infrastructure management. By studying and practicing these questions and sample answers, you’ll be well-equipped to tackle the interview and showcase your expertise. Remember to supplement these answers with your own experiences and insights. Good luck with your interview!